Lecture 2: Camera

Overview

Our ray tracing task requires us to:

- Compute a “viewing ray” for each pixel in the output image.

- Find the closest intersection point in the scene.

- Set the pixel value based on material (and later, on lighting).

So what is a ray?

A starting point $p_0$ and a direction $d$ (normalized).

The ray is represented parametrically, using $t$ for time:

$$p(t) = p_0 + t\,d$$

In general, we test for intersections against geometric objects by solving for valid values of $t$.

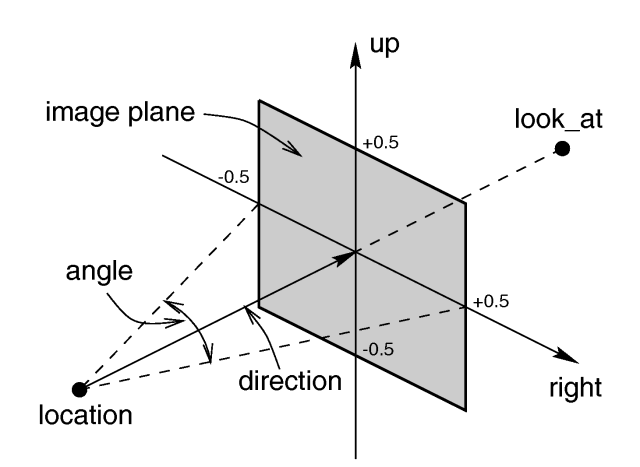
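As a minimal sketch of the parametric form, the following Python stores a ray as a starting point plus a normalized direction and evaluates the point reached at a given parameter value. The names `Ray`, `origin`, `direction`, `at`, and `normalize` are illustrative choices, not taken from the course code.

```python
import math
from dataclasses import dataclass


def normalize(v):
    """Return v scaled to unit length (v is a 3-tuple of floats)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


@dataclass
class Ray:
    origin: tuple      # starting point of the ray
    direction: tuple   # direction of travel, expected to be unit length

    def at(self, t):
        """Point reached at parameter value t along the ray."""
        return tuple(o + t * d for o, d in zip(self.origin, self.direction))


# A ray starting on the +Z axis and heading toward -Z.
ray = Ray(origin=(0.0, 0.0, 14.0), direction=normalize((0.0, 0.0, -2.0)))
point = ray.at(3.0)  # 3 units along the ray
```

Because the direction is normalized, the parameter can be read as distance travelled from the starting point, which makes "closest intersection" the same as "smallest valid parameter value".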

Image Plane

Given some camera specification, we need to be able to compute view rays. Let’s assume that our camera is sitting somewhere along the positive Z axis and is looking towards -Z. We want to define some image plane in 3D space. Then, we can map a given pixel in our output image to a point in this image plane, and use that to find the ray for that particular pixel.

For a given pixel $(i, j)$, let’s define a set of coordinates $(U_s, V_s)$, which we’ll call the “view space coordinates”. With $W$ and $H$ as the output image width and height:

$$U_s = \frac{i}{W}, \qquad V_s = \frac{j}{H}$$

This gives us an image plane that stretches from $0$ to $1$ in view space coordinates. But we want the image plane to stretch from $-0.5$ to $0.5$. We can accomplish this quite simply by shifting our coordinates:

$$U_s = -0.5 + \frac{i}{W}, \qquad V_s = -0.5 + \frac{j}{H}$$

Another problem with these equations is that they give us the lower-left corner of each pixel box instead of its center. Let’s say that we want the pixel centers (there are a few reasons to prefer them, though the resulting image will be nearly the same):

$$U_s = -0.5 + \frac{i + 0.5}{W}, \qquad V_s = -0.5 + \frac{j + 0.5}{H}$$
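The pixel mapping can be sketched in a few lines of Python. This assumes the image plane is meant to span -0.5 to 0.5 on both axes and that pixel (0, 0) is the lower-left pixel. The function name `pixel_to_view` and its parameter names are hypothetical.

```python
def pixel_to_view(i, j, width, height):
    """Map pixel (i, j) to view space coordinates in the range -0.5 to 0.5.

    The +0.5 offset selects the pixel center rather than its lower-left corner.
    """
    u_s = -0.5 + (i + 0.5) / width
    v_s = -0.5 + (j + 0.5) / height
    return u_s, v_s


# First and last pixels of a 640x480 image.
first = pixel_to_view(0, 0, 640, 480)
last = pixel_to_view(639, 479, 640, 480)
```

With the half-pixel offset, the first and last pixels land symmetrically about zero, half a pixel inside the edges of the image plane.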

There’s one other problem here.

The images that we are rendering will typically be rectangular, not a perfect square.

Most of the time we will render 640x480 images in this class, which have an aspect ratio of 4:3.

If we use this square image plane we will get squashed images that distort the relationship between horizontal and vertical scale. There are two equivalent ways to solve this problem. We could stretch the image plane along the horizontal axis by the aspect ratio, $W/H$ (image width over height).

However, we will eventually be multiplying these view space coordinates by the basis vectors of our camera.

We can use a “right” basis vector with length $W/H$ to account for the aspect ratio at that time.

If you take a look at any of the .pov files for this class, you will notice that the “right” vectors are scaled in this way.
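For illustration, here is how such a scaled “right” vector could be computed from the image size. This is a sketch that assumes the camera’s right direction lies along world +X, as in the setup described earlier. The function name `scaled_right` is hypothetical.

```python
def scaled_right(width, height):
    """Right basis vector along +X with length equal to the aspect ratio."""
    aspect = width / height
    return (aspect, 0.0, 0.0)


right = scaled_right(640, 480)  # roughly (1.333, 0.0, 0.0) for a 4:3 image
```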

View Space

Definitions

It is common to define a coordinate system using a set of orthogonal basis vectors. Our world space coordinate system is defined by the orthogonal basis vectors $x$, $y$, and $z$. We will define our view space basis vectors $u$, $v$, and $w$. These are often called the “camera basis vectors”.

We can find our basis vectors using the camera specification in our .pov files:

    camera {
      location <0, 0, 14>
      up <0, 1, 0>
      right <1.333, 0, 0>
      look_at <0, 0, 1>
    }

$u$ is our right vector (note that it is not normalized).

$v$ is our up vector.

Here we run into a problem, however: we still need the third basis vector, $w$.

location and look_at describe positions, not vectors, so neither gives us $w$ directly. But there is another problem.

We have a right-handed coordinate system, which means that $w$ must be the right-handed cross product of $u$ and $v$.

However, that gives us a $w$ that points in the opposite direction of where the camera is looking.

We will account for this by defining $w$ as the opposite of the “look” vector $l$, which is the direction the camera is actually looking.

The look vector can be found by simply normalizing the difference between look_at and location:

$$l = \frac{\text{look\_at} - \text{location}}{\lVert \text{look\_at} - \text{location} \rVert}, \qquad w = -l$$
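The construction can be checked numerically with the values from the camera block above. This is a sketch only: the helper names `normalize` and `cross` and the variable names `look`, `third`, and `right_cross_up` are illustrative.

```python
import math


def normalize(v):
    """Scale a 3-tuple to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def cross(a, b):
    """Right-handed cross product of two 3-tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


# Values taken from the camera block above.
location = (0.0, 0.0, 14.0)
look_at = (0.0, 0.0, 1.0)
right = (1.333, 0.0, 0.0)   # left un-normalized on purpose
up = (0.0, 1.0, 0.0)

# Direction the camera is actually looking: toward -Z here.
look = normalize(tuple(a - b for a, b in zip(look_at, location)))

# Third basis vector: the opposite of the look vector.
third = tuple(-c for c in look)

# The cross product of right and up points along +Z, the same way as `third`,
# which is why the look vector has to be negated.
right_cross_up = cross(right, up)
```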

Transformations

Given some coordinate in view space, $(U_s, V_s, W_s)$, we can find that position transformed into world space, $p_w$, using the following equation:

$$p_w = p_c + U_s\,u + V_s\,v + W_s\,w$$

Where $u$, $v$, and $w$ are the camera basis vectors and $p_c$ is the origin of the view space system expressed in world space coordinates, otherwise known as the “camera position”.

We have $U_s$ and $V_s$, along with the basis vectors, defined above, but what is $W_s$?

Geometrically, this is the distance the image plane is pushed along the viewing axis. This distance is usually referred to as the focal length. In this class we will use a focal length of 1, since that results in a reasonable field of view and is also simple. Remember that our $w$ basis vector points in the opposite direction from where the camera is looking, so we define:

$$W_s = -1$$

Don’t forget that we leave our right vector un-normalized, contrary to the usual convention, to account for the aspect ratio down the line. You can just think of it as a normalized basis vector which has been scaled up by the aspect ratio.
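A minimal sketch of this transformation, assuming the usual change of basis (camera position plus each view space coordinate times its basis vector). The function name `view_to_world` and its parameter names are hypothetical.

```python
def view_to_world(u_s, v_s, w_s, camera_pos, right, up, back):
    """Transform a view space coordinate into world space.

    right, up, back are the camera basis vectors expressed in world space;
    back is the third basis vector, pointing opposite the look direction.
    """
    return tuple(
        camera_pos[k] + u_s * right[k] + v_s * up[k] + w_s * back[k]
        for k in range(3)
    )


# Upper-right corner of the image plane, pushed one unit along the view axis.
corner = view_to_world(
    0.5, 0.5, -1.0,
    camera_pos=(0.0, 0.0, 14.0),
    right=(1.333, 0.0, 0.0),   # un-normalized, carries the aspect ratio
    up=(0.0, 1.0, 0.0),
    back=(0.0, 0.0, 1.0),
)
```

Since the right vector is left at length 1.333, the corner lands at x = 0.6665 rather than 0.5, which is exactly the horizontal stretch the aspect ratio calls for.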

Camera Rays

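With all of these components we are ready to define the rays (in world space coordinates) for each pixel. For each ray we need an origin point and a direction. The origin point is easy: all rays originate from the camera location, $p_c$.

$$p_0 = p_c$$

For direction, we normalize the difference between the pixel’s position on the image plane (transformed to world space), $p_w$, and the camera origin. The camera position cancels, leaving only the view space coordinates $(U_s, V_s, W_s)$ multiplied by the basis vectors $u$, $v$, and $w$:

$$d = \frac{p_w - p_c}{\lVert p_w - p_c \rVert} = \frac{U_s\,u + V_s\,v + W_s\,w}{\lVert U_s\,u + V_s\,v + W_s\,w \rVert}$$

Plug these in to get the final ray equation:

$$p(t) = p_c + t\,\frac{U_s\,u + V_s\,v + W_s\,w}{\lVert U_s\,u + V_s\,v + W_s\,w \rVert}$$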
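Putting the pieces together, the whole pipeline from pixel to world space ray might look like the following sketch. It assumes an image plane spanning -0.5 to 0.5, pixel centers, and a focal length of 1. The function name `camera_ray` and all parameter names are illustrative, not from the course code.

```python
import math


def normalize(v):
    """Scale a 3-tuple to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def camera_ray(i, j, width, height, location, look_at, right, up, focal=1.0):
    """Return (origin, direction) of the world space ray through pixel (i, j)."""
    # Pixel center mapped onto the image plane.
    u_s = -0.5 + (i + 0.5) / width
    v_s = -0.5 + (j + 0.5) / height

    # Third basis vector opposes the look direction, so the plane sits at -focal.
    look = normalize(tuple(a - b for a, b in zip(look_at, location)))
    back = tuple(-c for c in look)
    w_s = -focal

    # Offset from the camera to the pixel; the camera position cancels out.
    offset = tuple(
        u_s * right[k] + v_s * up[k] + w_s * back[k] for k in range(3)
    )
    return location, normalize(offset)


# Ray through a pixel just right of and above the image center.
origin, direction = camera_ray(
    320, 240, 640, 480,
    location=(0.0, 0.0, 14.0), look_at=(0.0, 0.0, 1.0),
    right=(1.333, 0.0, 0.0), up=(0.0, 1.0, 0.0),
)
```

Calling `camera_ray` once per pixel yields the viewing rays that the intersection tests consume.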